- Deep learning is a machine learning category focusing on the use of neural networks to process information

- As with most AI, it is maths-heavy: linear algebra, multivariable calculus and statistics all play a significant part in its inner workings

Artificial Neural Networks (ANNs)

- ANNs give machines the ability to process data in a way loosely modelled on how the human brain makes decisions or takes actions based on that data

- While there is still much to develop before machines have imagination and reasoning power similar to humans, ANNs help machines complete tasks and learn from the tasks they perform

- They are the building blocks of some ML systems that use supervised learning

- Deep learning is one application which relies heavily on ANNs

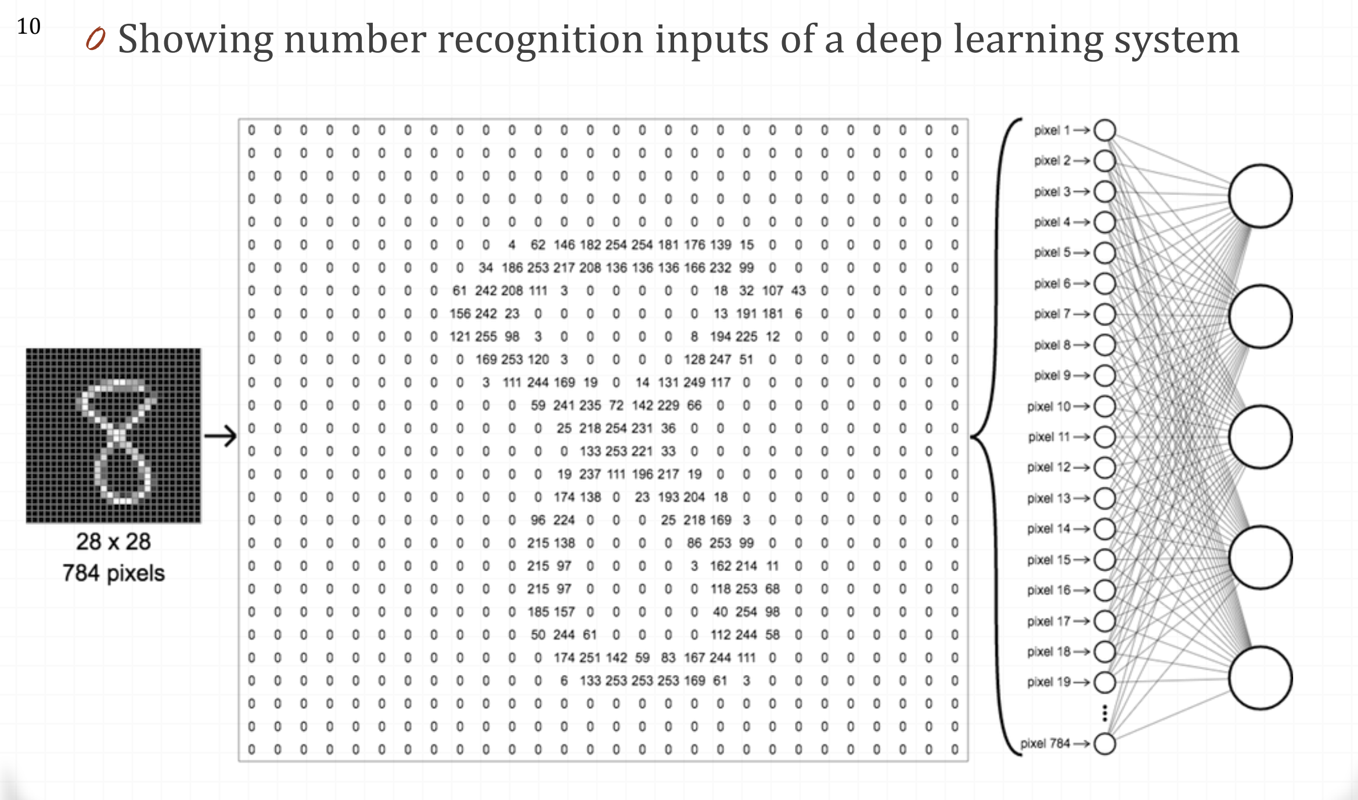

The key to working with an ANN is to quantify the inputs as numerical data points

- This is an easy task with most metrics, as much of what we measure is already numerical, e.g. age, size, money, energy, etc.

- Sequential data is also key: it is broken up into sequences, such as sentences into words or speech into phonemes

- How do you measure passion? Sarcasm? How could you take in a page of poetry and predict how it will finish? Or compose music?

- ANNs are not always the best method of implementing an ML system, as some things cannot easily be measured in this way
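To make the idea of quantifying inputs concrete, here is a minimal sketch in plain Python. It rescales a numeric metric (age) to the range 0 to 1 and turns a sentence into a sequence of integer word ids. The function names, the sample ages and the sample sentences are all illustrative assumptions, not part of any particular library or of these notes' original material.

```python
# Illustrative sketch only: the data and helper names below are assumptions.

def min_max_scale(values):
    """Rescale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def build_vocabulary(sentences):
    """Give each distinct lowercase word an integer id, in order of first appearance."""
    vocab = {}
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(sentence, vocab):
    """Turn a sentence into a sequence of integer word ids."""
    return [vocab[word] for word in sentence.lower().split()]


# A metric that is already numerical only needs rescaling.
ages = [18, 30, 42, 66]
scaled_ages = min_max_scale(ages)

# Sequential data is first broken into words, then each word is given a number.
sentences = ["the cat sat", "the dog sat down"]
vocab = build_vocabulary(sentences)
encoded = encode("the dog sat", vocab)

print(scaled_ages)  # [0.0, 0.25, 0.5, 1.0]
print(encoded)      # [0, 3, 2]
```

Qualities such as passion or sarcasm have no obvious encoding of this kind, which is the difficulty the questions above point at.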

The Human Brain

- The human brain is a complex network containing around 90 billion cells called neurons that communicate with each other via connections called synapses

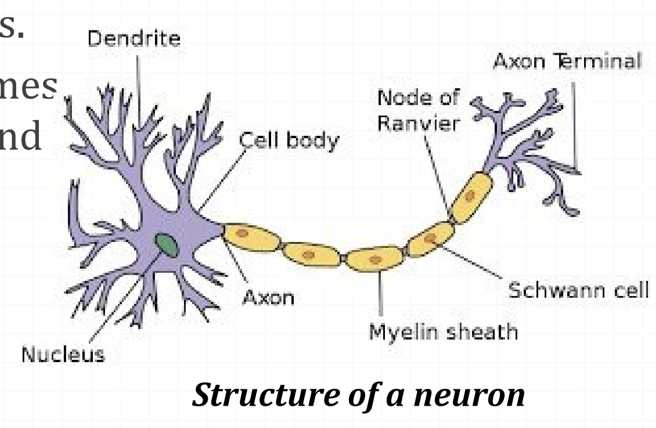

- Neurons are the fundamental computational units of the brain; each one has dendrites and an axon, and connects to other neurons through synapses

- The connections that wire neurons together are known as synapses; the human brain is estimated to have somewhere between 100 trillion and 1 quadrillion of them

- Dendrites act as signal receivers, taking in signals from other neurons, whereas the axon acts as a transmitter, sending signals on to other neurons

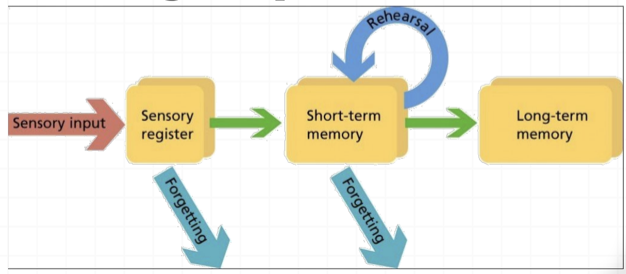

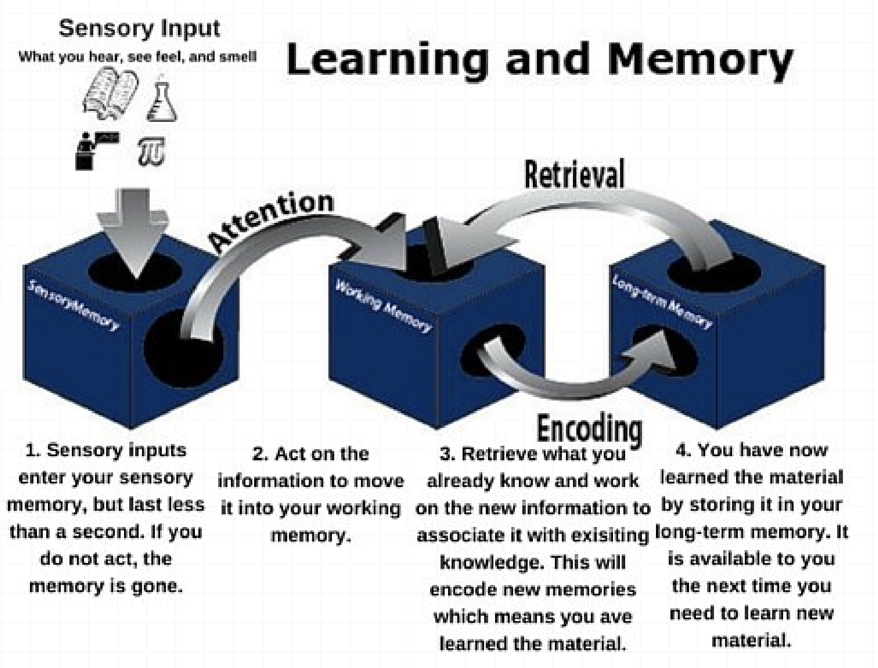

- In humans, the input comes from our senses (ears, nose, etc.) and is processed by the brain

- Neurons chain together through synapses to create associations, memories, etc.

- Most people refer to memory as something that they possess

- Memory doesn’t exist in the same way that a part of your body exists. It’s not physically present; rather, it is a concept that refers to the process of remembering

- The hippocampus and frontal cortex are responsible for analysing and processing sensory inputs and deciding whether they are worth encoding. Inputs that are kept are integrated into a single experience and can become part of long-term memory

- We process a vast amount of information (perceptions) from our senses, so deciding what to keep and what to discard is a critical process

- Neurons communicate with each other through chemical neurotransmitters and electrical signals (action potentials)

The AI Brain

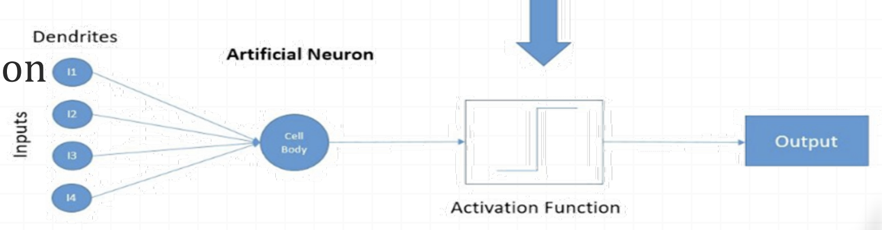

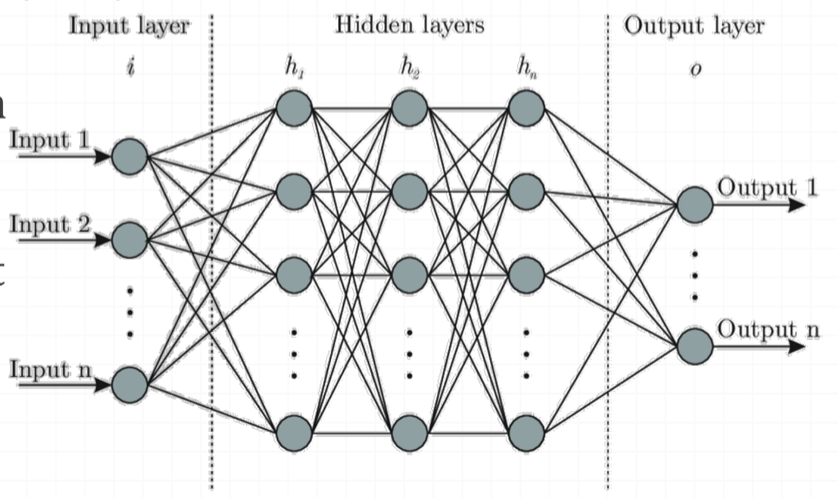

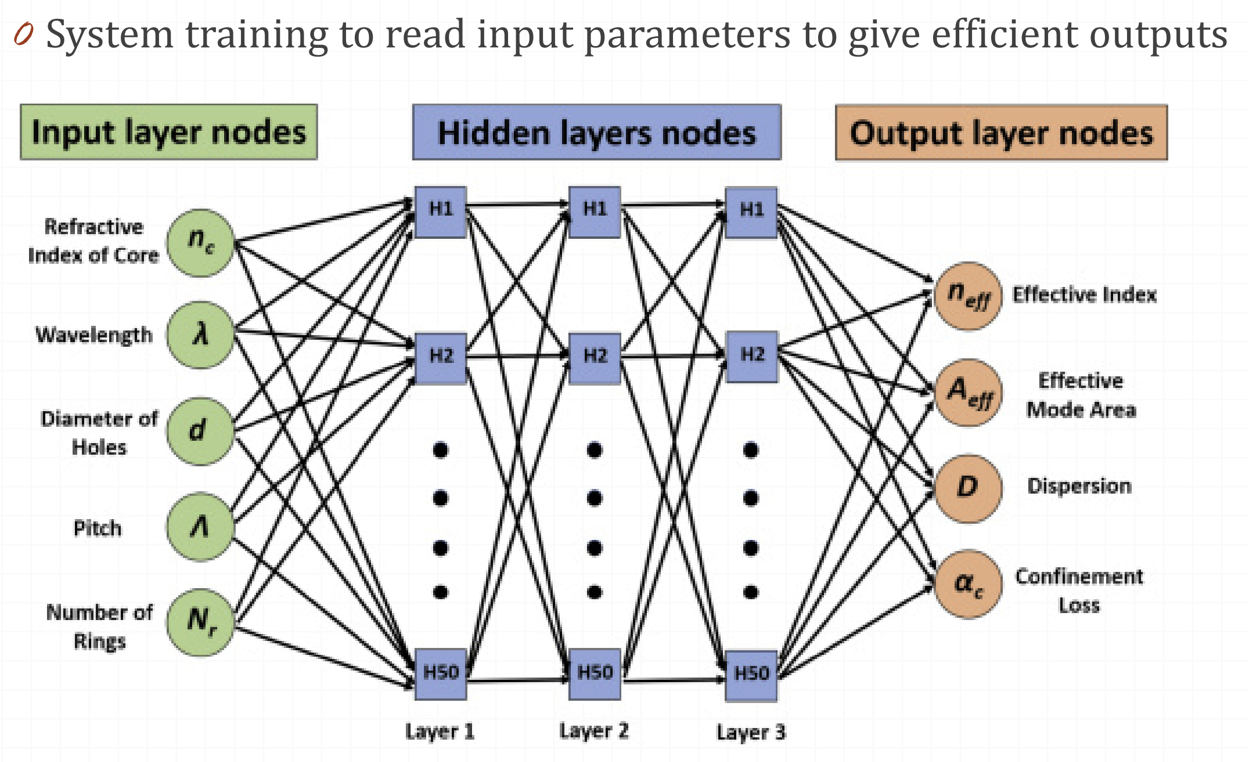

- In an Artificial Neural Network (ANN), the inputs (I1, I2, I3, etc.) are independent variables (independent of each other), whereas the output is a dependent variable (dependent on the inputs)

- The weights assigned to the connections between neurons play the role of synapses

- By changing the weights, a neural network learns which signals are important; the weights are what get adjusted during the process of learning

- Each artificial neuron can be connected to one or more inputs (depending on the training model)

- Each neuron connects to multiple other neurons, forming a connection chain, with the weightings being determined during training

- The hidden layers are where the input data is processed into something that can be sent to the output layer

- ML systems tend to be very opaque: it is hard to tell from the learned weights why a network produced a particular output
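The flow from inputs through a hidden layer to an output can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a definitive implementation: the weights and biases are hand-picked rather than learned, the layer sizes (3 inputs, 2 hidden neurons, 1 output) are arbitrary, and the sigmoid is just one common choice of activation function.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1). Assumed activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute the outputs of one layer.

    weights[j][i] is the weight on the connection from input i to neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


def forward(inputs, layers):
    """Pass the inputs through each layer in turn and return the final outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hand-picked, illustrative values (not trained).
hidden_layer = ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1])  # 3 inputs -> 2 neurons
output_layer = ([[1.0, -1.0]], [0.0])                              # 2 neurons -> 1 output

inputs = [1.0, 0.5, -1.0]  # I1, I2, I3
prediction = forward(inputs, [hidden_layer, output_layer])
print(prediction)  # a single value between 0 and 1
```

Even in this tiny network, the numbers in `hidden_layer` say little on their own about why the output comes out as it does, which hints at why larger networks are so opaque.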

Examples

Weights and Biases

- Imagine a handwriting recognition system. We pass in a series of images of alphabetic letters (a-z), each made up of 20x20 pixels

- Each pixel of the image forms an input, giving 400 inputs per image

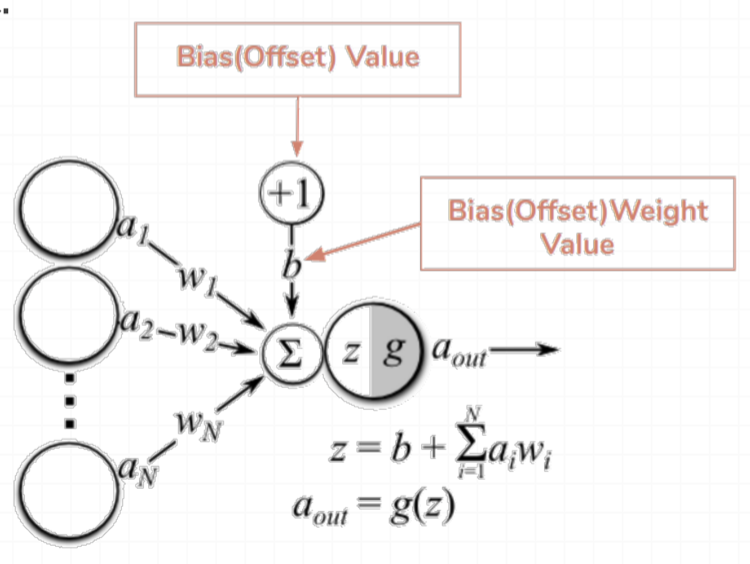
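As a rough sketch of how a 20x20 image becomes a list of inputs, the snippet below builds a blank image, draws a crude vertical stroke on it, and flattens it row by row. The image contents and helper names are made up for illustration; a real system would load scanned or drawn letters instead.

```python
WIDTH = HEIGHT = 20


def blank_image():
    """Create a 20x20 grid of pixel intensities, all 0.0 (background)."""
    return [[0.0] * WIDTH for _ in range(HEIGHT)]


def flatten(image):
    """Lay the rows end to end so that each pixel becomes one network input."""
    return [pixel for row in image for pixel in row]


image = blank_image()

# Draw a crude vertical stroke (hypothetical stand-in for part of a letter).
for row in range(3, 17):
    image[row][10] = 1.0

inputs = flatten(image)
print(len(inputs))  # 400
```

The pixel at row `r`, column `c` ends up at index `r * 20 + c` in the flattened list.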

- Weights and biases (w and b) are the learnable parameters

- In an ANN, each neuron in a layer is connected to some or all of the neurons in the next layer

- When the inputs are transmitted between neurons, the weights are applied to the inputs along with the bias

- Weights control the signal (strength of the connection) between two neurons

- In other words, a weight decides how much influence the input will have on the output

- A bias is a constant that is added to the weighted sum of the inputs, shifting the point at which the neuron activates
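The effect of weights and biases on a single neuron can be shown with a short calculation. This is only a sketch: the input values, weights and biases below are arbitrary numbers chosen so the arithmetic is easy to follow.

```python
def weighted_sum(inputs, weights, bias):
    """Multiply each input by its weight, add the results together, then add the bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]

# 0.5*1.0 + (-1.0)*2.0 + 0.25*3.0 = -0.75
base = weighted_sum(inputs, weights, bias=0.0)

# The same weights with a bias of 1.0 shift the result up by exactly 1.0.
shifted = weighted_sum(inputs, weights, bias=1.0)

# Raising the third weight from 0.25 to 1.0 gives the third input more influence.
stronger = weighted_sum(inputs, [0.5, -1.0, 1.0], bias=0.0)

print(base, shifted, stronger)  # -0.75 0.25 1.5
```

The weight on each connection scales how much that input contributes, while the bias moves the total up or down regardless of the inputs.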

Activation Function

- This is a function that takes the summed values and makes a judgement on whether to activate the neuron or not, which in turn has influence over neurons feeding from it and, eventually, the output

- It is a critical part of the network. Through adjustments to the weights and biases, the network is trained to trigger the desired outputs from its layers of neurons
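The sketch below shows two common activation functions and a very small training loop. Note the assumptions: it uses the classic perceptron learning rule on a single neuron, which is a deliberately simple stand-in rather than the method used by larger networks (those are typically trained with gradient-based methods such as backpropagation). The AND-gate data, the learning rate and the number of epochs are all illustrative choices.

```python
import math


def step(z):
    """Activate (output 1) when the summed value is non-negative, otherwise output 0."""
    return 1 if z >= 0 else 0


def sigmoid(z):
    """A smooth alternative to the step function, with outputs between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(inputs, weights, bias):
    """Sum the weighted inputs and the bias, then apply the step activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Nudge the weights and bias whenever the neuron's output is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(inputs, weights, bias)  # -1, 0 or 1
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for a logical AND: the output is 1 only when both inputs are 1.
and_gate = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train_perceptron(and_gate)
results = [predict(x, weights, bias) for x, _ in and_gate]
print(results)  # [0, 0, 0, 1]
```

Each wrong answer moves the weights and bias slightly in the direction that would have produced the right one, so after a few passes over the data the neuron only activates for the input `[1, 1]`.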