Task Scheduler §

The task scheduler is responsible for the smooth running of the CPU. Its primary goal is to ensure equal time is given to the CPU and that no particular process is given unwarranted processing time. Most importantly, that the CPU time is optimised.

Low level scheduler §

- co-ordinates processes, running time, etc, that are already loaded into memory

- Decides which process, in the ready queue gets CPU time and how much

- Process states:

- Start

- Ready

- Running

- Can go to Terminated or to Blocked

- Goes to terminated if it crashes or users terminates it or if its finished (burst time up)

- Blocked

- Blocked never goes back to running

- Terminated

Medium level scheduler §

- Handles the swapping in and out of processes where memory requirements are larger than available memory

- Also handles where a process is blocked and taking up valuable memory

High level scheduler §

- Deals with loading of programs/processes in and out of memory (first load, termination)

- Allocates memory resources

- Allocates partition of memory, paging, etc.

Key Terms §

CPU burst time §

- The CPU burst time is the time in milliseconds that a process requires to complete. Also called execution time. This is the time it remains ready (i.e. unterminated or not waiting for IO)

- Word processors have short burst time due to waiting or the keyboard, a video-transcoder would have a long burst time

Preemptive scheduling §

Scheduling mechanism whereby a process can be moved from running to ready while in mid-execution for other processes or high-importance tasks

- It is the responsibility of CPU scheduler to allot a process to the CPU whenever the CPU is in the idle state

- The CPU scheduler selects a process from ready queue and allocates the process to the CPU

- The scheduling which takes place when a process switches from running state to ready state or from waiting state to ready state is called Preemptive Scheduling

Non-preemptive scheduling §

Does not interrupt a process running, but instead waits for the process to complete its allotted CPU burst time or move into a waiting state

- On the other hand, the scheduling which takes place when a process terminates or switches from running to waiting for state

- This kind of CPU scheduling is called Non-premptive Scheduling

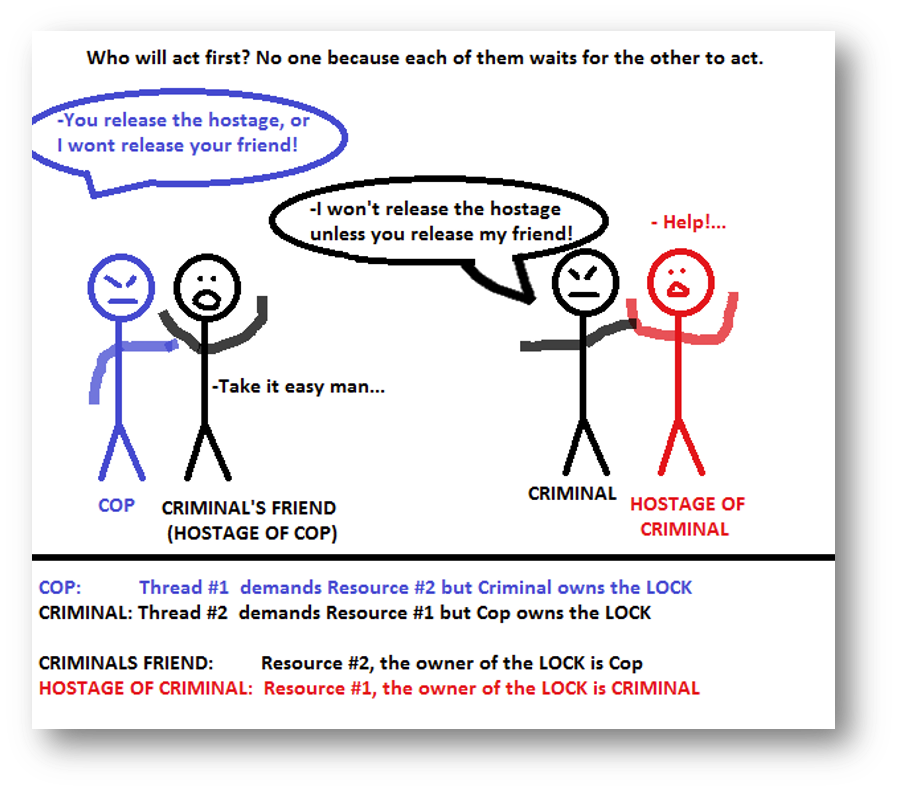

Deadlocks §

- Modern multithreaded OSs will allocate resources dynamically

- Deadlocks occur when two processes have exclusive locks on different resources, but then each need to access to other’s resource (e.g. hard disk).

- Neither can proceed, and it’s deadlocked

- The solution to this is to kill one or both of the processes

Types of Scheduling Algorithms §

Round Robin §

| Benefits | Drawbacks |

|---|

| Fair Time Allocation: Each process gets an equal share of the CPU time, which ensures a more democratic and fair system. | No Priority Consideration: No priority is given to the importance of a task, which can be problematic for time-sensitive processes. |

| Reduced Starvation Risk: As each process is given a time slice in turn, the chance of starvation (a process waiting forever / a long time) is significantly reduced. | Context Switching Overhead: Frequent switching between processes can lead to increased overhead, reducing overall system performance. |

| Responsive System: Suitable for time-sharing systems, as it gives the impression of parallelism and responsiveness. | Not good for Long Processes: Not ideal for longer processes as they get the same CPU time as shorter ones, potentially leading to low performance. |

Shortest job first §

| Benefits | Drawbacks |

|---|

| Efficiency in Job Processing: SJF tends to minimise the average waiting time for a set of requests, making it efficient for systems with many small jobs. | Starvation of Long-Running Jobs: Longer processes may suffer from CPU starvation if there are always shorter jobs available, as SJF prioritises shorter jobs over longer ones. |

| Optimal for Short Jobs: Ideal for scenarios where the majority of the jobs are short, as it quickly clears them from the queue. | Implementation Challenges: Accurately predicting the job length can be difficult, leading to inefficiency if predictions are inaccurate. |

| Reduces Wait Time for Short Jobs: Short jobs are processed quickly, which can be advantageous in environments where quick turnaround for small tasks is critical. | Not Ideal for Time-Shared Systems: In systems where (short) jobs continuously enter the system at a fast rate, long jobs might never get a chance to execute. |

| Simple Model: The basic idea of SJF is straightforward and easy to understand, which can simplify certain aspects of system design. | No Consideration for Priorities: SJF does not account for the priority of tasks, potentially delaying critical jobs in favor of shorter, less important ones. |

- The effectiveness of SJF depends heavily on the specific requirements and characteristics of the system it’s being used in

- For instance, in a batch processing system where job length can be predicted with reasonable accuracy, SJF can be highly effective

- However, in systems where job length is unpredictable or where there are many long-running jobs, SJF might not be the best choice.

First Come, First Served §

| Benefits | Drawbacks |

|---|

| Very Simple to Implement: This method is straightforward as it processes jobs in the order they arrive, without any complex logic. | Short Jobs can Become Stuck Behind Long-Running Jobs: Known as the “convoy effect”, short processes may have to wait a long time if a long process is being executed. |

| Predictable: The order of execution is clear and predictable, which can simplify debugging and process management. | No Priority Handling: Does not consider the priority of processes, leading to potentially critical tasks waiting behind less important ones. |

| Minimal Overhead: There’s minimal overhead since there’s no need for complex scheduling logic or frequent context (process) switching. | Inefficient for Time-Sharing: Not suitable for systems that require quick responses to interactive users due to the potential for long wait times. |

Shortest Remaining Time §

| Benefits | Drawbacks |

|---|

| Optimal for Short Jobs: Highly efficient for systems with many short jobs as it minimizes the waiting time for these processes. | No Reliable or Accurate Way to Determine Burst Time: Predicting the exact remaining time of a process can be challenging, affecting scheduling accuracy. |

| Dynamic Scheduling: Continuously evaluates the remaining time of processes, making it adaptable to changing workloads. | Long Processes can Starve: Longer tasks might suffer from starvation if shorter tasks keep arriving, similar to the Shortest Job First issue. |

| Reduces Average Waiting Time: Typically results in a lower average waiting time compared to other non-preemptive algorithms. | Overhead from Constant Rescheduling: Frequent decision-making and context switching can lead to significant overhead, impacting overall efficiency. |

Key Terms §

| Term | Definition |

|---|

| Time Quantum | The fixed time period a process is allowed to run in a round-robin scheduling algorithm. |

| Context Switching | The process of storing and restoring the state (context) of a CPU so a process can resume where it left off. |

| CPU Burst Time | The time period for which a process is continuously running on the CPU without blocking. |

| Multitasking | The ability of an operating system to execute more than one process or task simultaneously. |

| Process Control Block (PCB) | A data structure used by the operating system to store all the information about a process. |

| Average Wait Time | The average amount of time processes spend waiting in the ready queue before getting CPU time. |